Optimizing Boomi's Document Cache with Parallel Processing

Managing Boomi's document cache can be challenging, especially when parallel processing is involved. The document cache lets a process store and retrieve documents during execution, which is useful for data correlation and lookups. However, combining caching directly with parallel processing can lead to conflicts, particularly in multi-node environments. Subprocesses and concurrent caching help address these challenges.

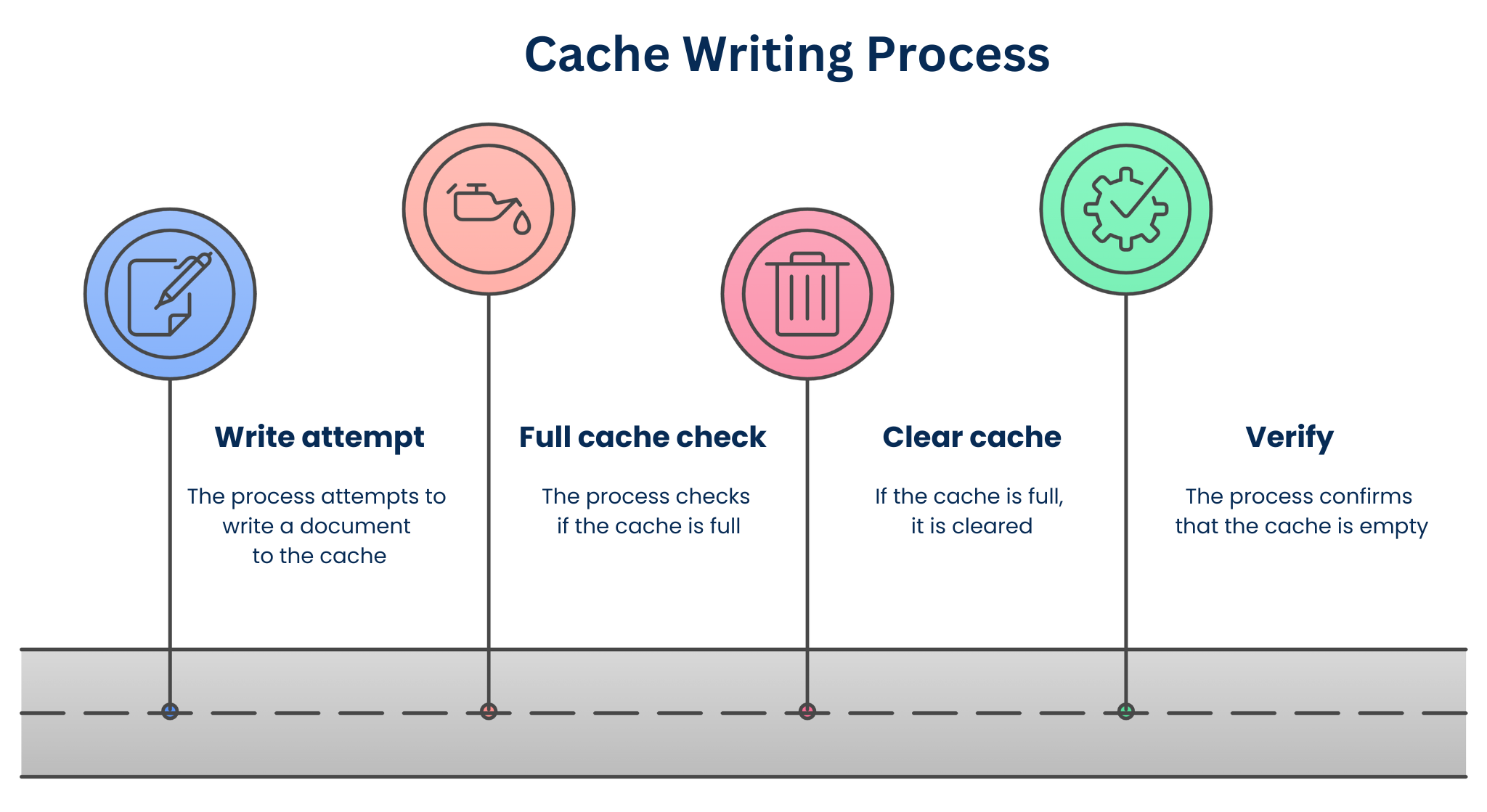

Consider a Boomi process that writes documents to the cache. This can become complex when multiple processes access it simultaneously. Here's an example caching scenario:

- Write Attempt: The process attempts to write a document to the cache.

- Full Cache Check: The process checks if the cache is full.

- Cache Clearing: If the cache is full, it is cleared.

- Verification: The process confirms the cache is empty.
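The four steps above can be sketched as a small simulation. This is only an illustration in plain Python, not Boomi's cache API: the `DocumentCache` class, its `capacity` limit, and the method names are all hypothetical.

```python
class DocumentCache:
    """Toy in-memory cache with a fixed capacity (hypothetical, not a Boomi API)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.documents = {}

    def is_full(self):
        return len(self.documents) >= self.capacity

    def clear(self):
        self.documents.clear()

    def write(self, key, document):
        # Full cache check: clear before writing if there is no room left.
        if self.is_full():
            self.clear()
            # Verification: confirm the cache is empty before continuing.
            if self.documents:
                raise RuntimeError("cache was not cleared")
        # Write attempt.
        self.documents[key] = document


cache = DocumentCache(capacity=2)
for doc_id in ("doc-1", "doc-2", "doc-3"):
    cache.write(doc_id, {"id": doc_id})

print(sorted(cache.documents))  # ['doc-3']
```

With a single writer the verification step always passes. Once several writers share the cache, another write could land between the clear and the check, which is where the sequence stops being trivial.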

On its own, this sequence is straightforward. The challenge appears once parallel processing is involved.

Parallel Processing Challenge

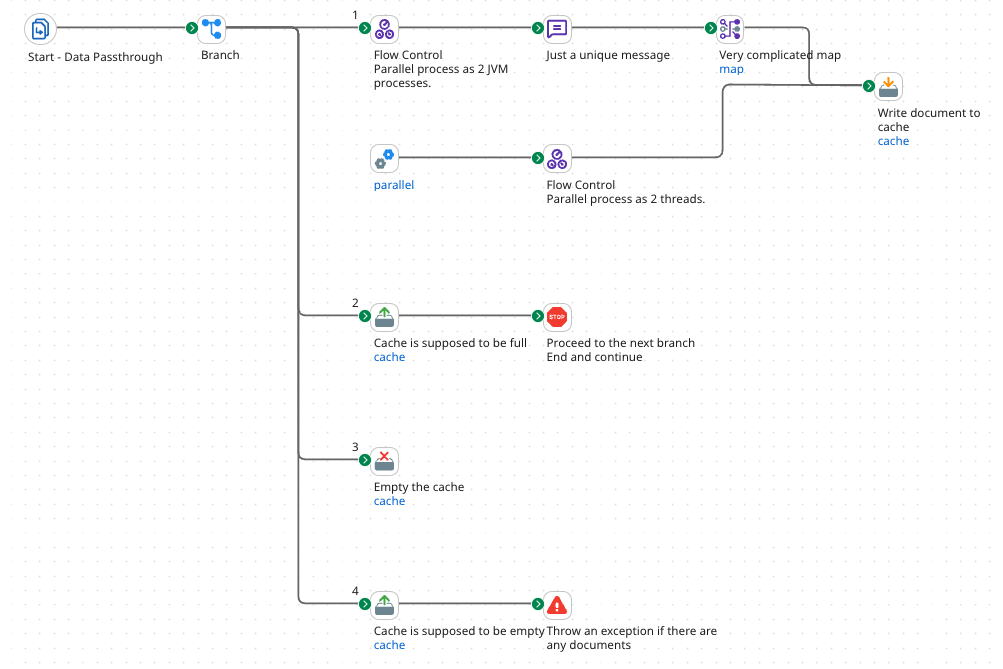

Consider a sample process built on the Boomi Enterprise Platform to process documents. It includes:

- Flow Control component - for running parallel processes (with Processes as the Parallel Processing Type)

- Message component - for generating a unique ID

- Map component - for transforming documents; often the most resource-intensive step

However, running the Flow Control, Message, and Map components directly within the main caching process can lead to unexpected issues. Why? Because the Flow Control component distributes parallel processes across multiple nodes (in a runtime cluster or Cloud environment). Since the cache isn’t shared between nodes or processes, documents cached by one execution are not visible to another, which leads to inconsistent behavior and unexpected errors.
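The isolation problem can be illustrated with a toy model. The sketch below assumes each node keeps a private in-memory cache and that documents are handed out in turn; the `Node` class and the round-robin distribution are hypothetical stand-ins, not a description of how Boomi actually schedules work.

```python
from itertools import cycle


class Node:
    """Stand-in for one runtime node; each node holds its own private cache."""

    def __init__(self, name):
        self.name = name
        self.cache = {}


nodes = [Node("node-a"), Node("node-b")]
documents = ["doc-1", "doc-2", "doc-3", "doc-4"]

# Distribute documents across nodes in turn and cache each one locally.
for node, doc_id in zip(cycle(nodes), documents):
    node.cache[doc_id] = {"id": doc_id, "cached_on": node.name}

# A lookup succeeds only on the node that wrote the entry.
found_on_a = "doc-2" in nodes[0].cache
found_on_b = "doc-2" in nodes[1].cache
print(found_on_a, found_on_b)  # False True
```

Any logic that expects to find `doc-2` in "the" cache behaves differently depending on which node runs it, which is the kind of inconsistency described above.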

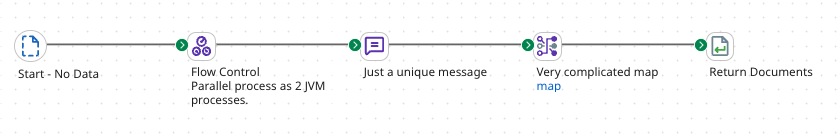

The solution is to use subprocesses instead of executing these components in the main caching process.

Subprocess Solution

Moving the Flow Control, Message, and Map components into a separate subprocess resolves this challenge: it isolates document processing from the caching logic and avoids cross-node conflicts.
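As a rough analogy, the subprocess can be thought of as a function that receives documents, processes them in parallel, and returns the results without ever touching the cache. The names `transform` and `run_subprocess` are hypothetical, and a thread pool stands in here for Boomi's process-level fan-out purely to keep the sketch small.

```python
import uuid
from concurrent.futures import ThreadPoolExecutor


def transform(document):
    """Stand-in for the Message and Map steps: tag with a unique ID, then map."""
    return {
        "id": str(uuid.uuid4()),
        "payload": document["payload"].upper(),
    }


def run_subprocess(documents, workers=4):
    """Hypothetical subprocess: fans out the work and returns the results.

    It receives no cache reference, so it cannot read or write the cache.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the inputs.
        return list(pool.map(transform, documents))


incoming = [{"payload": "alpha"}, {"payload": "beta"}, {"payload": "gamma"}]
processed = run_subprocess(incoming)
print([doc["payload"] for doc in processed])  # ['ALPHA', 'BETA', 'GAMMA']
```

Because the cache is never passed in, all cache access stays with the caller, which mirrors the separation between the subprocess and the main caching process.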

Concurrent Caching

After the subprocess completes, a second Flow Control component (with Threads as the Parallel Processing Type) manages the documents returning to the main process. Because multiple documents may return at the same time, they can be written to the cache concurrently using the main process’s multithreading capabilities. This keeps throughput high and helps avoid a bottleneck at the cache write.
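The concurrent write stage might look like the following in plain Python. The shared dictionary, the explicit lock, and the `write_to_cache` helper are assumptions of this sketch; the source does not describe how Boomi synchronizes cache writes internally.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

cache = {}
cache_lock = threading.Lock()


def write_to_cache(document):
    """Write one returned document to the shared cache."""
    with cache_lock:
        cache[document["id"]] = document


# Stand-in for the documents coming back from the subprocess.
returned = [{"id": f"doc-{n}", "payload": n * n} for n in range(100)]

with ThreadPoolExecutor(max_workers=8) as pool:
    # Consume the iterator so any exception raised in a worker surfaces here.
    list(pool.map(write_to_cache, returned))

print(len(cache))  # 100
```

All writers run as threads inside one process and therefore share the same cache, which is what makes concurrent writes safe here in a way they were not across nodes.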

By structuring workflows this way, you achieve:

- Faster Processing - The Map execution speeds up with parallel processing.

- Efficient Concurrent Caching - Documents returning from subprocesses are written to the cache without delays, maximizing performance.

To sum it up, caching in a parallel processing environment requires strategic planning. By using subprocesses for document handling and Flow Control to manage concurrent caching, you can significantly boost efficiency in the Boomi Enterprise Platform.

Want to fine-tune your caching strategy? Try this approach and watch your performance scale!